Application log analytics pipeline

Centralized Logging with OpenSearch supports log analysis for application logs, such as NGINX/Apache HTTP Server logs or custom application logs.

Note

Centralized Logging with OpenSearch supports cross-account log ingestion. If you ingest logs from the same account, the resources in the Sources group are in the same AWS account as Centralized Logging with OpenSearch. Otherwise, they are in another AWS account.

Logs from Amazon EC2 / Amazon EKS

Centralized Logging with OpenSearch supports collecting logs from Amazon EC2 instances or Amazon EKS clusters. The workflow supports two scenarios.

Scenario 1: Using OpenSearch Engine

Application log pipeline architecture for EC2/EKS

The log pipeline runs the following workflow:

- Fluent Bit works as the underlying log agent. It collects logs from application servers and either sends them to an optional Log Buffer or ingests them directly into the OpenSearch domain.
- The Log Buffer invokes the AWS Lambda function (Log Processor).
- The Log Processor reads and processes the log records, then ingests them into the OpenSearch domain.
- Logs that fail to be processed are exported to an Amazon S3 bucket (Backup Bucket).
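The processing and backup steps above can be sketched as follows. This is a minimal illustration, not the solution's actual Log Processor: the function names `process_records` and `to_bulk_body`, the assumption that each record is a JSON line, and the index name are all hypothetical. Records that parse are formatted as an OpenSearch `_bulk` request body; records that do not are returned separately so that a caller could write them to the Backup Bucket.

```python
import json
from typing import Any


def process_records(raw_records: list[str]) -> tuple[list[dict[str, Any]], list[str]]:
    """Split raw log lines into parsed documents and records that failed.

    Assumption: each log record is a single JSON object per line.
    """
    documents: list[dict[str, Any]] = []
    failed: list[str] = []
    for raw in raw_records:
        try:
            doc = json.loads(raw)
            if not isinstance(doc, dict):
                raise ValueError("log record is not a JSON object")
            documents.append(doc)
        except ValueError:  # json.JSONDecodeError is a subclass of ValueError
            failed.append(raw)  # candidate for export to the Backup Bucket
    return documents, failed


def to_bulk_body(documents: list[dict[str, Any]], index_name: str) -> str:
    """Build a newline-delimited body for the OpenSearch _bulk API."""
    lines: list[str] = []
    for doc in documents:
        lines.append(json.dumps({"index": {"_index": index_name}}))  # action line
        lines.append(json.dumps(doc))                                # document line
    return "\n".join(lines) + "\n" if lines else ""
```

Keeping parsing separate from the bulk formatting makes it straightforward to route the `failed` list to a different destination than the ingested documents.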

Scenario 2: Using Light Engine

Application log pipeline architecture for EC2/EKS

The log pipeline runs the following workflow:

- Fluent Bit works as the underlying log agent. It collects logs from application servers and sends them to a Log Bucket.
- When a new log file is created, S3 Event Notifications sends an event notification to Amazon SQS.
- Amazon SQS invokes AWS Lambda.
- AWS Lambda loads the log file from the Log Bucket.
- AWS Lambda puts the log file into the Staging Bucket.
- The Log Processor, running on AWS Step Functions, processes the raw log files stored in the Staging Bucket in batches.
- The Log Processor converts the raw log files to Apache Parquet format and automatically partitions all incoming data based on criteria including time and Region.
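The partitioning step above can be illustrated with a small sketch. The partition key names (`event_hour`, `region`), the hourly granularity, and the record shape are assumptions made for this example; the text only states that data is partitioned by criteria including time and Region. The sketch derives a Hive-style `key=value` prefix for each record and groups records by that prefix, which is the grouping a Parquet writer would then act on.

```python
from collections import defaultdict
from datetime import datetime, timezone
from typing import Any


def partition_prefix(event_time: datetime, region: str) -> str:
    """Return a Hive-style partition prefix for a timezone-aware timestamp."""
    utc = event_time.astimezone(timezone.utc)  # normalize so partitions align
    return f"event_hour={utc:%Y%m%d%H}/region={region}/"


def group_by_partition(records: list[dict[str, Any]]) -> dict[str, list[dict[str, Any]]]:
    """Group records (hypothetical shape: 'time' and 'region' keys) by partition."""
    groups: dict[str, list[dict[str, Any]]] = defaultdict(list)
    for record in records:
        groups[partition_prefix(record["time"], record["region"])].append(record)
    return dict(groups)
```

Normalizing to UTC before formatting keeps records from different time zones in the same hourly partition when they describe the same instant.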

Logs from Amazon S3

Centralized Logging with OpenSearch supports collecting logs from Amazon S3 buckets. The workflow supports three scenarios:

Scenario 1: Using OpenSearch Engine (Ongoing)

In this scenario, the solution continuously reads and parses logs whenever you upload a log file to the specified Amazon S3 location.

Application log pipeline architecture for Amazon S3

The log pipeline runs the following workflow:

- The user uploads logs to an Amazon S3 bucket (Log Bucket).
- When a new log file is created, S3 Event Notifications sends an event notification to Amazon SQS.
- Amazon SQS invokes AWS Lambda (Log Processor).
- The Log Processor reads and processes the log files.
- The Log Processor ingests the processed logs into the OpenSearch domain.
- Logs that fail to be processed are exported to an Amazon S3 bucket (Backup Bucket).
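As an illustration of the notification steps above, the following sketch shows how a Lambda function invoked by Amazon SQS might locate the newly created log files. It assumes the standard shape of an S3 event notification wrapped in an SQS message body; the function name `extract_s3_objects` is hypothetical and is not taken from the solution's source code.

```python
import json
from urllib.parse import unquote_plus


def extract_s3_objects(sqs_event: dict) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs for every S3 object referenced in an SQS event."""
    objects: list[tuple[str, str]] = []
    for message in sqs_event.get("Records", []):
        body = json.loads(message["body"])
        # S3 test events carry no "Records" key, so they yield nothing here.
        for record in body.get("Records", []):
            bucket = record["s3"]["bucket"]["name"]
            # Object keys arrive URL-encoded in S3 event notifications.
            key = unquote_plus(record["s3"]["object"]["key"])
            objects.append((bucket, key))
    return objects
```

Decoding the key matters in practice: a file name containing spaces or parentheses would otherwise not match the object stored in the Log Bucket.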

Scenario 2: Using OpenSearch Engine (One-time)

In this scenario, the solution scans existing log files stored in the specified Amazon S3 location and ingests them into the log analytics engine in a single operation.

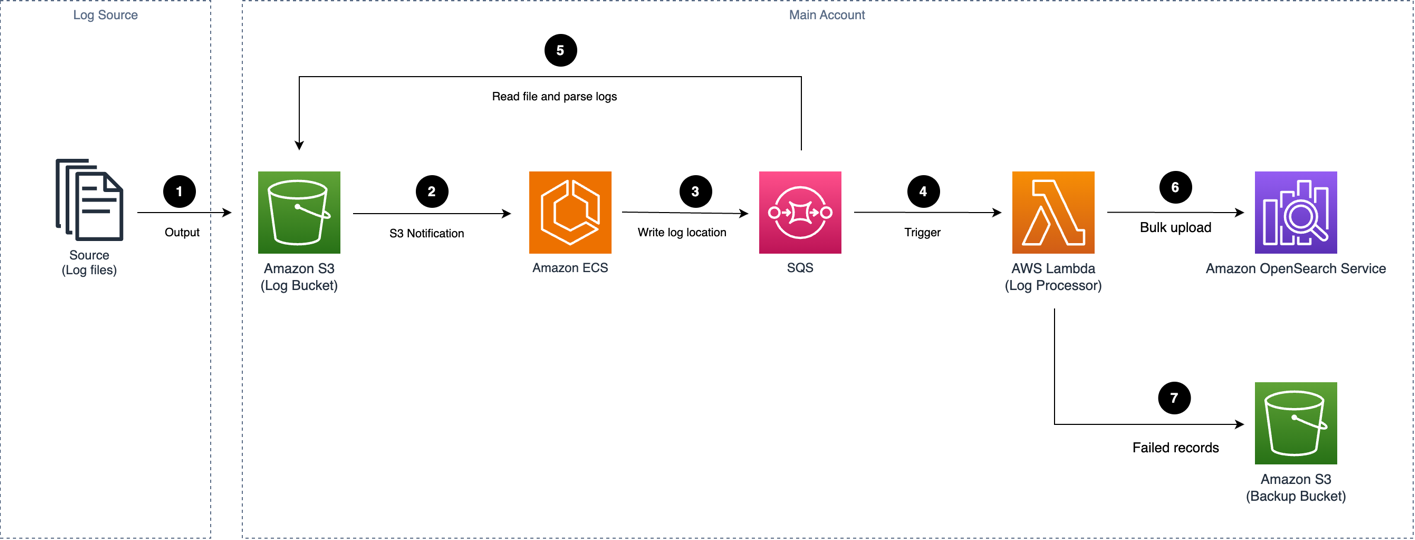

Application log pipeline architecture for Amazon S3

The log pipeline runs the following workflow:

- The user uploads logs to an Amazon S3 bucket (Log Bucket).
- An Amazon ECS task iterates over the log files in the Log Bucket.
- The Amazon ECS task sends the location of each log file to an Amazon SQS queue.
- Amazon SQS invokes AWS Lambda.
- The Log Processor reads and parses the log files.
- The Log Processor ingests the processed logs into the OpenSearch domain.
- Logs that fail to be processed are exported to an Amazon S3 bucket (Backup Bucket).
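The iteration and queueing steps above could look roughly like the sketch below. It only prepares message batches and makes no AWS calls; the message body shape and the function name `batch_log_locations` are assumptions for illustration. The batch size of 10 reflects the Amazon SQS limit on entries per `SendMessageBatch` request, and each entry uses the `Id` and `MessageBody` fields that request expects.

```python
import json
from typing import Iterable, Iterator

SQS_MAX_BATCH = 10  # SQS accepts at most 10 entries per SendMessageBatch call


def batch_log_locations(bucket: str, keys: Iterable[str]) -> Iterator[list[dict]]:
    """Yield batches of SQS entries, one entry per log file location."""
    batch: list[dict] = []
    for key in keys:
        batch.append({
            "Id": str(len(batch)),  # must be unique within a batch
            "MessageBody": json.dumps({"bucket": bucket, "key": key}),
        })
        if len(batch) == SQS_MAX_BATCH:
            yield batch
            batch = []
    if batch:  # flush the final partial batch
        yield batch
```

Because the function is a generator, a task scanning a large bucket can send each batch as it is produced instead of holding every key in memory.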

Scenario 3: Using Light Engine (Ongoing)

Application log pipeline architecture for Amazon S3

The log pipeline runs the following workflow:

- Logs are uploaded to an Amazon S3 bucket (Log Bucket).
- When a new log file is created, S3 Event Notifications sends an event notification to Amazon SQS.
- Amazon SQS invokes AWS Lambda.
- AWS Lambda copies objects from the Log Bucket.
- AWS Lambda writes the copied objects to the Staging Bucket.
- AWS Step Functions periodically triggers the Log Processor to process the raw log files stored in the Staging Bucket in batches.
- The Log Processor converts the raw log files to Apache Parquet format and automatically partitions all incoming data based on criteria including time and Region.

Logs from Syslog Client

Important

- Make sure the subnet of your Syslog generator/sender is connected to the two private subnets of Centralized Logging with OpenSearch. You may need to use a VPC peering connection or AWS Transit Gateway to connect these VPCs.
- The Network Load Balancer and the Amazon ECS containers shown in the architecture diagram are provisioned only when you create a Syslog ingestion, and are deleted automatically when no Syslog ingestion remains.

Scenario 1: Using OpenSearch Engine

Application log pipeline architecture for Syslog

- A Syslog client (such as Rsyslog) sends logs to a Network Load Balancer in the private subnets of Centralized Logging with OpenSearch, and the Network Load Balancer routes them to the Amazon ECS containers running Syslog servers.
- Fluent Bit works as the underlying log agent in the Amazon ECS service. It parses logs and either sends them to an optional Log Buffer or ingests them directly into the OpenSearch domain.
- The Log Buffer invokes the AWS Lambda function (Log Processor).
- The Log Processor reads and processes the log records, then ingests them into the OpenSearch domain.
- Logs that fail to be processed are exported to an Amazon S3 bucket (Backup Bucket).
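To give a sense of what parsing a Syslog message involves, the sketch below decodes the PRI header that begins each message. It is not the Fluent Bit parser used by the solution; the function name `decode_pri` is hypothetical. The arithmetic follows the Syslog convention that the priority value equals the facility multiplied by 8 plus the severity.

```python
import re

# Severity names indexed by numeric severity (0 = most severe).
SEVERITIES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]
_PRI = re.compile(r"^<(\d{1,3})>")


def decode_pri(line: str) -> tuple[int, str]:
    """Return (facility number, severity name) from a Syslog line's PRI header."""
    match = _PRI.match(line)
    if match is None:
        raise ValueError("missing syslog PRI header")
    pri = int(match.group(1))
    if pri > 191:  # 23 facilities * 8 + 7 is the largest valid value
        raise ValueError(f"PRI out of range: {pri}")
    facility, severity = divmod(pri, 8)
    return facility, SEVERITIES[severity]
```

For example, a line starting with `<34>` decodes to facility 4 and severity `crit`, because 34 = 4 * 8 + 2.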

Scenario 2: Using Light Engine

Application log pipeline architecture for Syslog

- A Syslog client (such as Rsyslog) sends logs to a Network Load Balancer in the private subnets of Centralized Logging with OpenSearch, and the Network Load Balancer routes them to the Amazon ECS containers running Syslog servers.
- Fluent Bit works as the underlying log agent in the Amazon ECS service. It parses logs and sends them to a Log Bucket.
- When a new log file is created, S3 Event Notifications sends an event notification to Amazon SQS.
- Amazon SQS invokes AWS Lambda.
- AWS Lambda copies objects from the Log Bucket.
- AWS Lambda writes the copied objects to the Staging Bucket.
- AWS Step Functions periodically triggers the Log Processor to process the raw log files stored in the Staging Bucket in batches.
- The Log Processor converts the raw log files to Apache Parquet format and automatically partitions all incoming data based on criteria including time and Region.