Create an evaluation form with a title in Amazon Connect

In Amazon Connect, you can create many different evaluation forms. For example, you may need a different evaluation form for each business unit and type of interaction. Each form can contain multiple sections and questions. You can assign weights to each question and section to indicate how much their score impacts the agent's total score. You can also configure automation on each question, so that answers to those questions are automatically filled using insights and metrics from Contact Lens conversational analytics.

This topic explains how to create a form and configure automation using the Amazon Connect admin website. To create and manage forms programmatically, see Evaluation actions in the Amazon Connect API Reference.
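A minimal Python sketch of the programmatic route follows. It assumes boto3's Amazon Connect client, whose `list_evaluation_forms` method wraps the ListEvaluationForms action and returns results in pages linked by `NextToken`. The client is passed in so the function can be tried without AWS credentials. Confirm the response field names against the API Reference before relying on them.

```python
from typing import Any, Dict, Iterator


def iter_evaluation_forms(connect_client: Any, instance_id: str) -> Iterator[Dict[str, Any]]:
    """Yield every evaluation form summary for an Amazon Connect instance.

    connect_client is assumed to be boto3.client("connect"); it is injected
    rather than created here so the function can be exercised offline.
    """
    request: Dict[str, Any] = {"InstanceId": instance_id}
    while True:
        # Each call returns one page of summaries plus an optional NextToken.
        page = connect_client.list_evaluation_forms(**request)
        yield from page.get("EvaluationFormSummaryList", [])
        next_token = page.get("NextToken")
        if not next_token:
            return
        request["NextToken"] = next_token
```

For example, `iter_evaluation_forms(boto3.client("connect"), "your-instance-id")` yields one summary per form, where `your-instance-id` is a placeholder for your instance ID.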


Step 1: Create an evaluation form with a title

The following steps explain how to create or duplicate an evaluation form and set a title.

-

Log in to Amazon Connect with a user account that has permissions to create evaluation forms.

-

Choose Analytics and optimization, then choose Evaluation forms.

-

On the Evaluation forms page, choose Create new form.

—or—

Select an existing form and choose Duplicate.

-

Enter a title for the form, such as Sales evaluation, or change the existing title. When finished, choose Ok.

The following tabs appear at the top of the evaluation form page:

-

Sections and questions. Add sections, questions, and answers to the form.

-

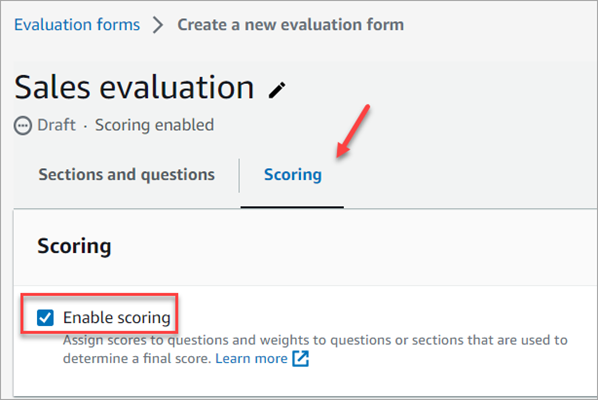

Scoring. Enable scoring on the form. You can also apply scoring to sections or questions.


-

Choose Save at any time while creating your form. This enables you to navigate away from the page and return to the form later.

-

Continue to the next step to add sections and questions.

Step 2: Add sections and questions

-

While on the Sections and questions tab, add a title for section 1, for example, Greeting.

-

Choose Add question to add a question.

-

In the Question title box, enter the question that will appear on the evaluation form. For example, Did the agent state their name and say they are here to assist?

-

In the Instructions to evaluators box, add information to help evaluators or generative AI answer the question. For example, for the question Did the agent try to validate the customer's identity? you might provide additional instructions such as, The agent is required to always ask a customer their membership ID and postal code before addressing the customer’s questions.

-

In the Question type box, choose one of the following options to appear on the form:

-

Single selection: The evaluator can choose from a list of options, such as Yes/No or Good/Fair/Poor.

-

Text field: The evaluator can enter free-form text.

-

Number: The evaluator can enter a number from a range that you specify, such as 1-10.


-

Continue to the next step to add answers.

Step 3: Add answers

-

On the Answers tab, add the answer options that you want to display to evaluators, such as Yes and No.

-

To add more answers, choose Add option.

The following image shows example answers for a Single selection question.

The following image shows an answer range for a Number question.

-

You can also mark a question as optional. This enables managers to skip the question (or mark it as Not applicable) while performing an evaluation.

-

When you're finished adding answers, continue to the next step to enable scoring and add ranges for scoring Number answers.
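The rules in Steps 2 and 3 (three question types, a fixed option list for Single selection, a range for Number, and Not applicable only on optional questions) can be summarized as an answer check. This is a minimal Python sketch; the `Question` class, the type labels, and the `NOT_APPLICABLE` sentinel are hypothetical and do not reflect the Amazon Connect API schema.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical sentinel for "Not applicable"; accepted only on optional questions.
NOT_APPLICABLE = object()


@dataclass
class Question:
    title: str
    question_type: str  # hypothetical labels: "single_selection", "text", "number"
    options: List[str] = field(default_factory=list)  # used by single_selection
    min_value: Optional[float] = None  # used by number
    max_value: Optional[float] = None  # used by number
    optional: bool = False


def is_valid_answer(question: Question, answer: object) -> bool:
    """Check an evaluator's answer against the question type and settings."""
    if answer is None or answer is NOT_APPLICABLE:
        # Skipping or marking Not applicable is allowed only for optional questions.
        return question.optional
    if question.question_type == "single_selection":
        return answer in question.options
    if question.question_type == "text":
        return isinstance(answer, str)
    if question.question_type == "number":
        if isinstance(answer, bool) or not isinstance(answer, (int, float)):
            return False
        if question.min_value is None or question.max_value is None:
            raise ValueError("number questions need a range")
        return question.min_value <= answer <= question.max_value
    raise ValueError(f"unknown question type: {question.question_type}")
```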

Step 4: Assign scores and ranges to answers

-

Go to the top of the form. Choose the Scoring tab, and then choose Enable scoring.

This enables scoring for the entire form. It also enables you to add ranges for answers to Number question types.

-

Return to the Sections and questions tab. Now you can assign scores to Single selection questions and add ranges for Number questions.

-

When you create a Number type question, on the Scoring tab, choose Add range to enter a range of values. Indicate the worst to best score for the answer.

The following image shows an example of ranges and scoring for a Number question type.

-

If the agent interrupted the customer 0 times, they get a score of 10 (best).

-

If the agent interrupted the customer 1-4 times, they get a score of 5.

-

If the agent interrupted the customer 5-10 times, they get a score of 1 (worst).

Important

If you assign a score of Automatic fail to a question, the entire evaluation form is assigned a score of 0. The Automatic fail option is shown in the following image.


-

After you assign scores to all the answers, choose Save.

-

When you're finished assigning scores, continue to the next step to automate the answers to certain questions, or continue to preview the evaluation form.
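The interruptions example in this step maps each Number answer to a score by looking up the range that contains it. A minimal Python sketch of that lookup follows, assuming inclusive range bounds. The example does not say what happens to a value outside every range (such as 12 interruptions), so this sketch returns `None` in that case.

```python
from typing import List, Optional, Tuple

# (low, high, score) with inclusive bounds, from the interruptions example.
INTERRUPTION_RANGES: List[Tuple[int, int, int]] = [
    (0, 0, 10),  # best
    (1, 4, 5),
    (5, 10, 1),  # worst
]


def score_number_answer(value: int, ranges: List[Tuple[int, int, int]]) -> Optional[int]:
    """Return the score of the first range that contains value, or None if none does."""
    for low, high, score in ranges:
        if low <= value <= high:
            return score
    return None
```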

Step 5: Enable automated evaluations

Contact Lens enables you to automatically answer questions within evaluation forms (for example, Did the agent adhere to the greeting script?) using insights and metrics from conversational analytics. You can use automation to:

-

Assist evaluators with performance evaluations: Evaluators are provided with automated answers to questions on evaluation forms while performing evaluations. Evaluators can override automated answers before submission.

-

Automatically fill and submit evaluations: Administrators can configure evaluation forms to automate responses to all questions within an evaluation form and automatically submit evaluations for up to 100% of agents’ customer interactions. Evaluators can still edit and resubmit evaluations if needed.

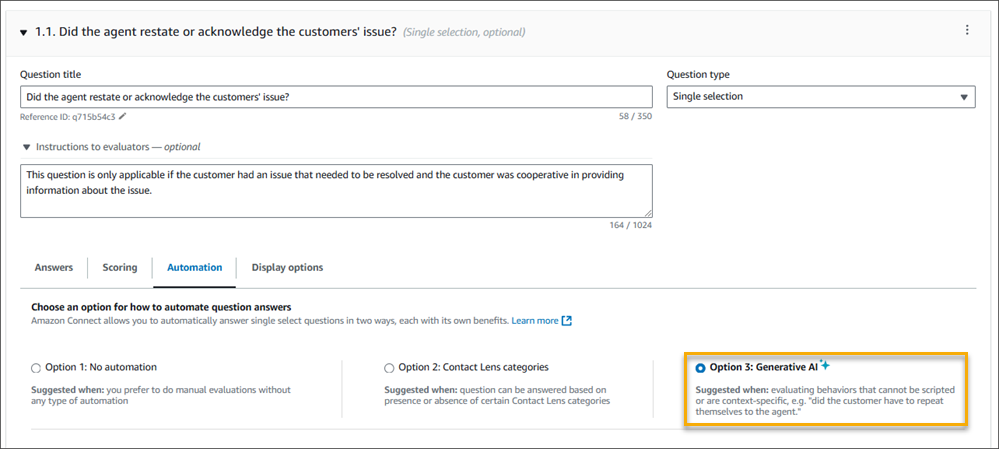

For both scenarios, you first need to set up automation on individual questions within an evaluation form. Contact Lens provides three ways of automating evaluations:

-

Contact Lens categories: Single selection questions (for example, Did the agent properly greet the customer? (Yes/No)) can be automatically answered using categories defined with Contact Lens rules. For more information, see Create Contact Lens rules using the Amazon Connect admin website.

-

Generative AI: Both Single selection and Text field questions can be automatically answered using generative AI.

-

Metrics: Number questions (for example, What was the longest time the customer was put on hold?) can be automatically answered using metrics such as longest hold duration and customer sentiment score.
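The three automation sources each answer only certain question types, so a form's automation settings can be checked against the list above. This is a minimal Python sketch; the source labels (`category`, `generative_ai`, `metric`) and type labels are hypothetical names, not values from the Amazon Connect API.

```python
from typing import Dict, List, Optional, Set, Tuple

# Which automation source can answer which question type, per the list above.
ALLOWED_AUTOMATION: Dict[str, Set[str]] = {
    "single_selection": {"category", "generative_ai"},
    "text": {"generative_ai"},
    "number": {"metric"},
}


def automation_errors(questions: List[Tuple[str, str, Optional[str]]]) -> List[str]:
    """Return a message for each (title, question_type, automation) that is incompatible.

    automation is None when the question is left for the evaluator to answer manually.
    """
    errors = []
    for title, question_type, automation in questions:
        if automation is None:
            continue
        if automation not in ALLOWED_AUTOMATION.get(question_type, set()):
            errors.append(f"{title}: {automation} cannot answer a {question_type} question")
    return errors
```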

The following examples show each type of automation.

Example automation for a Single selection question using Contact Lens categories

-

The following image shows that the answer to the evaluation question is Yes when Contact Lens has categorized the contact with the label ProperGreeting. To label contacts as ProperGreeting, you must first set up a rule that detects the words or phrases expected in a proper greeting, for example, whether the agent said "Thank you for calling" in the first 30 seconds of the interaction.

For information about setting up Contact Lens categories, see Automatically categorize contacts.

Example automation for an optional Single selection question using Contact Lens categories

-

The following image shows example automation of an optional Single selection question. The first condition checks whether the question is applicable. A rule checks whether the contact is about opening a new account; if it is, the contact is categorized as CallReasonNewAccountOpening. If the call is not about opening a new account, the question is marked as Not Applicable.

The subsequent conditions run only if the question is applicable. The answer is marked as Yes or No based on the Contact Lens category NewAccountDisclosures. This category checks whether the agent provided the customer with disclosures about opening a new account.

For information about setting up Contact Lens categories, see Automatically categorize contacts.
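The optional-question example evaluates two conditions in order: an applicability check, then the answer itself. A minimal Python sketch of that decision logic follows. The category names come from the example; the function name and the idea of passing in the set of matched categories are illustrative assumptions.

```python
from typing import Set

NOT_APPLICABLE = "Not applicable"


def answer_new_account_disclosure(matched_categories: Set[str]) -> str:
    """Answer the optional disclosure question from the contact's matched categories."""
    # First condition: is the question applicable to this contact?
    if "CallReasonNewAccountOpening" not in matched_categories:
        return NOT_APPLICABLE
    # Runs only when applicable: Yes if the disclosure category matched, otherwise No.
    return "Yes" if "NewAccountDisclosures" in matched_categories else "No"
```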

Example automation for an optional Single selection question using Generative AI

-

The following image shows example automation using generative AI. Generative AI automatically answers the evaluation question by interpreting the question title and the evaluation criteria specified in the question's instructions, and then using them to analyze the conversation transcript. Phrasing the evaluation question as a complete sentence and clearly specifying the evaluation criteria in the instructions improves the accuracy of generative AI. For more information, see Evaluate agent performance in Amazon Connect using generative AI.

Example automation for a Numeric question

-

If the agent interaction duration was less than 30 seconds, score the question as a 10.

-

On the Automation tab, choose the metric that is used to automatically evaluate the question.

-

You can automate responses to numeric questions using Contact Lens metrics (such as sentiment score of the customers, non-talk time percentage, and number of interruptions) and contact metrics (such as longest hold duration, number of holds, and agent interaction duration).

After you activate an evaluation form that has automation configured on some of its questions, you receive automated responses to those questions when you start an evaluation from the Amazon Connect admin website.

To automatically fill and submit evaluations

-

Set up automation on every question within an evaluation form as previously described.

-

Turn on Enable fully automated submission of evaluations before activating the evaluation form. This toggle is shown in the following image.

-

Activate the evaluation form.

-

When you activate the form, you are prompted to create a rule in Contact Lens that submits an automated evaluation. The rule specifies which contacts are automatically evaluated using the evaluation form. For more information, see Create a rule in Contact Lens that submits an automated evaluation.
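Because fully automated submission requires automation on every question, a pre-activation check simply lists the questions that still lack it. A minimal Python sketch, assuming a hypothetical `FormQuestion` record in which `automation` is `None` when no automation is configured:

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FormQuestion:
    title: str
    automation: Optional[str] = None  # None means no automation is configured


def questions_missing_automation(questions: List[FormQuestion]) -> List[str]:
    """Titles of questions that would block fully automated submission."""
    return [q.title for q in questions if q.automation is None]


def ready_for_full_automation(questions: List[FormQuestion]) -> bool:
    """True only when every question on the form has automation configured."""
    return not questions_missing_automation(questions)
```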

Step 6: Preview the evaluation form

The Preview button is active only after you have assigned scores to answers for all of the questions.

The following image shows the form preview. Use the arrows to collapse sections and make the form easier to preview. You can edit the form while viewing the preview, as shown in the following image.

Step 7: Assign weights for final score

When scoring is enabled for the evaluation form, you can assign weights to sections or questions. The weight raises or lowers the impact of a section or question on the final score of the evaluation.
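How weights combine into the final score is not spelled out here, so the following Python sketch rests on an assumption: each question's score is scaled to a fraction of its best possible score and multiplied by its weight (weights summing to 100 percent). It also applies the Automatic fail rule from Step 4, where any Automatic fail answer sets the whole form to 0. The `ScoredAnswer` class is hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScoredAnswer:
    weight: float  # percent of the total; weights across the form sum to 100
    points: float  # score of the selected answer
    max_points: float  # best possible score for this question
    automatic_fail: bool = False


def form_score(answers: List[ScoredAnswer]) -> float:
    """Weighted percentage score; any Automatic fail answer zeroes the whole form."""
    if any(a.automatic_fail for a in answers):
        return 0.0
    # Assumed formula: sum of weight * (points / max_points).
    return sum(a.weight * a.points / a.max_points for a in answers)
```

For example, two questions weighted 50 percent each and scored 10 of 10 and 5 of 10 give 50 + 25 = 75.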

Weight distribution mode

With Weight distribution mode, you choose whether to assign weight by section or question:

-

Weight by section: You can evenly distribute the weight of each question in the section.

-

Weight by question: You can lower or raise the weight of specific questions.

When you change a weight of a section or question, the other weights are automatically adjusted so the total is always 100 percent.

For example, in the following image, question 2.1 was manually set to 50 percent. The weights that display in italics were adjusted automatically. In addition, you can turn on Exclude optional questions from scoring, which assigns all optional questions a weight of zero and redistributes the weight among the remaining questions.
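The document does not say exactly how the remaining weights are adjusted, so the following Python sketch assumes proportional rescaling: the changed weight is pinned and the others keep their relative ratios while the total returns to 100 percent. It also sketches Exclude optional questions from scoring under the same assumption. Both function names are hypothetical.

```python
from typing import Dict, Iterable


def set_weight(weights: Dict[str, float], key: str, new_weight: float) -> Dict[str, float]:
    """Pin one weight and rescale the rest proportionally so the total stays 100.

    Proportional rescaling is an assumption; the product may redistribute differently.
    """
    if key not in weights:
        raise KeyError(key)
    if not 0 <= new_weight <= 100:
        raise ValueError("weight must be between 0 and 100 percent")
    others = {k: w for k, w in weights.items() if k != key}
    remaining = 100.0 - new_weight
    old_total = sum(others.values())
    result: Dict[str, float] = {}
    for k, w in others.items():
        # Split evenly if the other weights were all zero, otherwise keep their ratios.
        result[k] = remaining / len(others) if old_total == 0 else w * remaining / old_total
    result[key] = float(new_weight)
    return result


def exclude_optional(weights: Dict[str, float], optional: Iterable[str]) -> Dict[str, float]:
    """Give optional questions weight 0 and spread their weight over the remaining questions."""
    optional_set = set(optional)
    kept_total = sum(w for k, w in weights.items() if k not in optional_set)
    return {
        k: 0.0 if k in optional_set or kept_total == 0 else w * 100.0 / kept_total
        for k, w in weights.items()
    }
```

With four questions at 25 percent each, setting question 2.1 to 50 percent leaves about 16.67 percent for each of the other three.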

Step 8: Activate an evaluation form

Choose Activate to make the form available to evaluators. Evaluators can no longer choose the previous version of the form from the dropdown list when starting new evaluations. For any evaluations that were completed using previous versions, you can still view the version of the form on which the evaluation was based.

If you are still setting up the evaluation form and want to save your work, choose Save, Save draft.

If you want to check whether the form has been correctly set up, but not activate it, select Save, Save and validate.