Kubernetes has native features that help you make your applications more resilient to events such as the degraded health or impairment of an Availability Zone (AZ). When you run workloads in an Amazon EKS cluster, you can further improve the fault tolerance and recovery of your application environment by using Amazon Application Recovery Controller (ARC) zonal shift or zonal autoshift. ARC zonal shift is designed to be a temporary measure that lets you move traffic for a resource away from an impaired AZ until the zonal shift expires or you cancel it. You can extend the zonal shift if necessary.

You can start a zonal shift for an EKS cluster yourself, or you can allow AWS to do it for you by enabling zonal autoshift. The shift updates the flow of east-to-west network traffic in your cluster so that it considers only network endpoints for Pods running on worker nodes in healthy AZs. In addition, any ALB or NLB that handles ingress traffic for applications in your EKS cluster automatically routes traffic to targets in the healthy AZs. For customers with the highest availability goals, it can be important to steer all traffic away from an impaired AZ until it recovers. For this, you can also enable ARC zonal shift on an ALB or NLB.

Understanding east-west network traffic flow between Pods

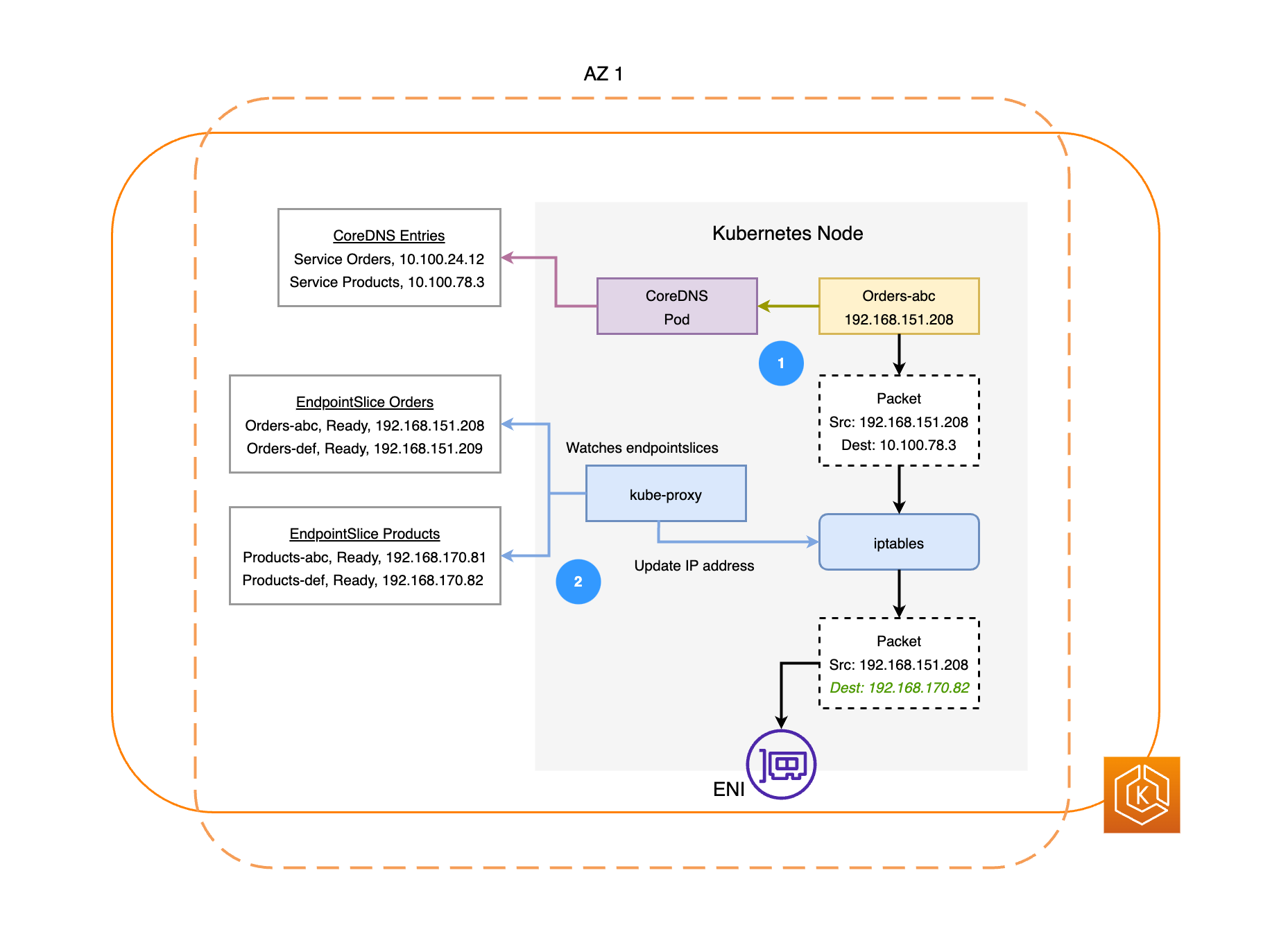

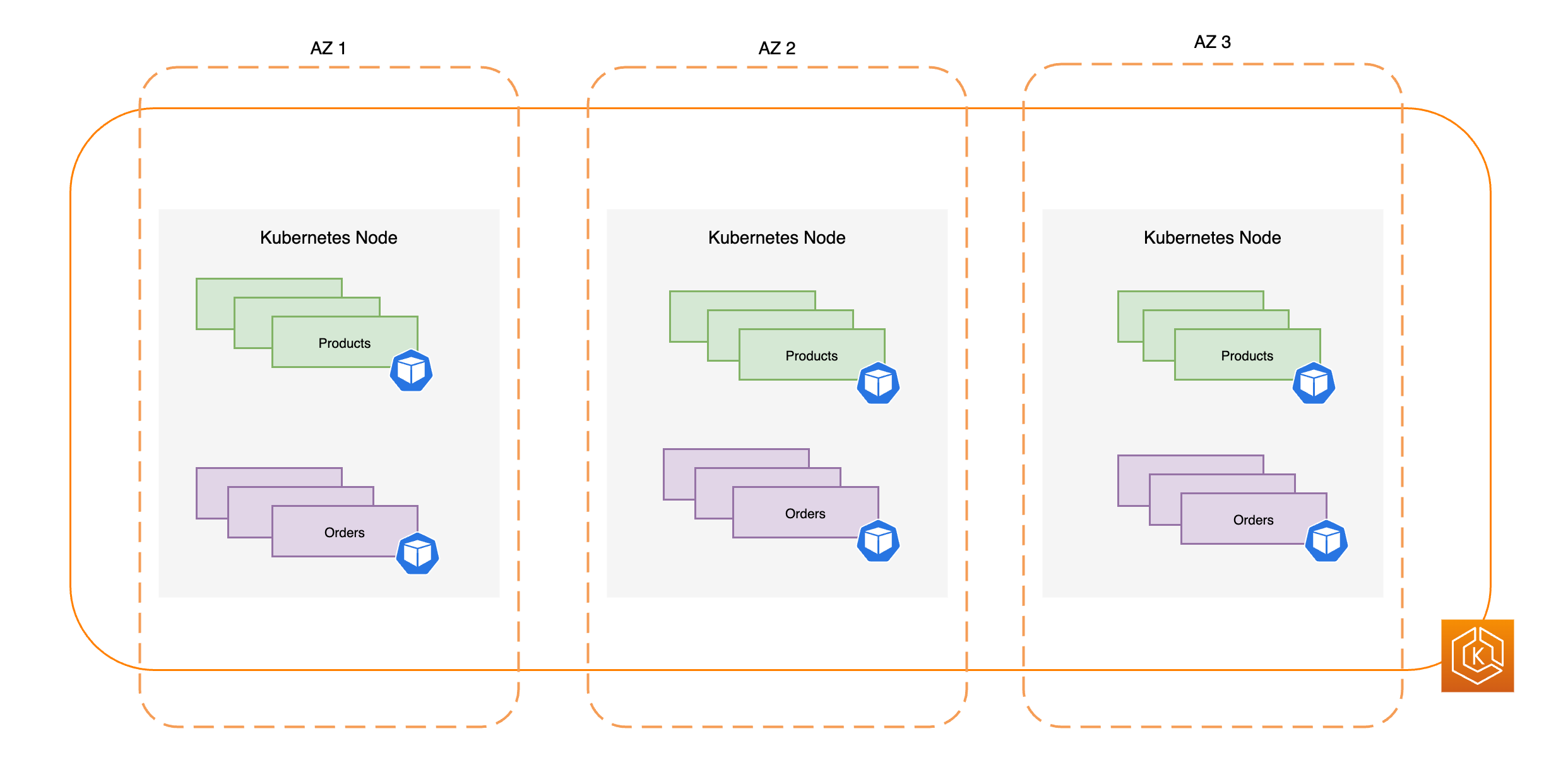

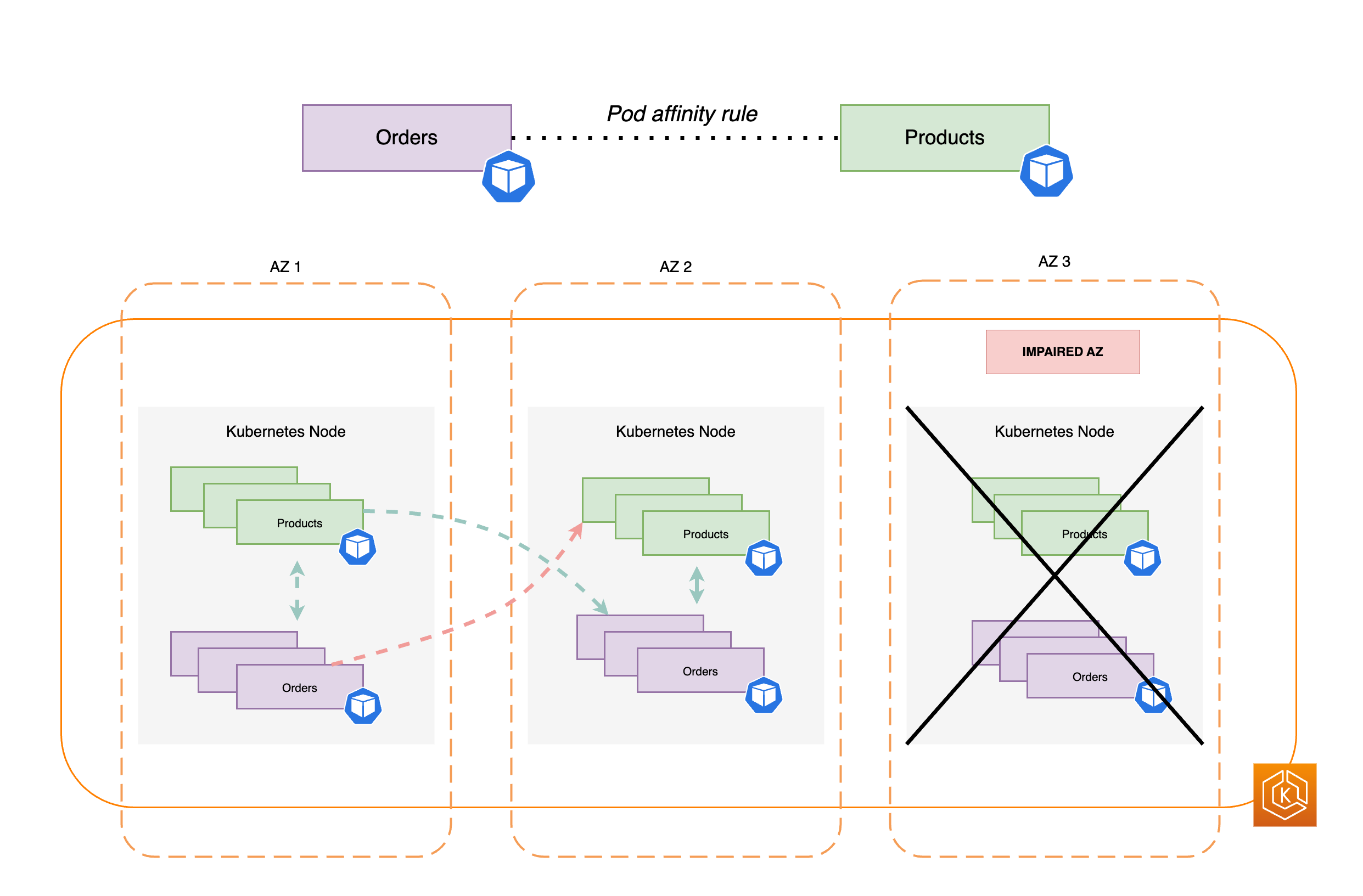

The diagram illustrates two example workloads, Orders and Products. The purpose of this example is to show how workloads and Pods in different AZs communicate.

-

Affinché gli Ordini comunichino con i Prodotti, devono prima risolvere il nome DNS del servizio di destinazione. Gli ordini comunicheranno con CoredNS per recuperare l'indirizzo IP virtuale (IP del cluster) per quel servizio. Una volta che Orders risolve il nome del servizio Products, invia il traffico a quell'IP di destinazione.

-

Il kube-proxy viene eseguito su ogni nodo del cluster e controlla continuamente i servizi. EndpointSlices

Quando viene creato un servizio, un servizio EndpointSlice viene creato e gestito in background dal controller. EndpointSlice Ciascuno EndpointSlice ha un elenco o una tabella di endpoint contenente un sottoinsieme di indirizzi Pod insieme ai nodi su cui sono in esecuzione. Il kube-proxy imposta le regole di routing per ciascuno di questi endpoint Pod utilizzando sui nodi. iptablesIl kube-proxy è anche responsabile di una forma base di bilanciamento del carico, reindirizzando il traffico destinato all'IP del cluster di un servizio per inviarlo invece direttamente all'indirizzo IP di un Pod. Il kube-proxy esegue questa operazione riscrivendo l'IP di destinazione sulla connessione in uscita. -

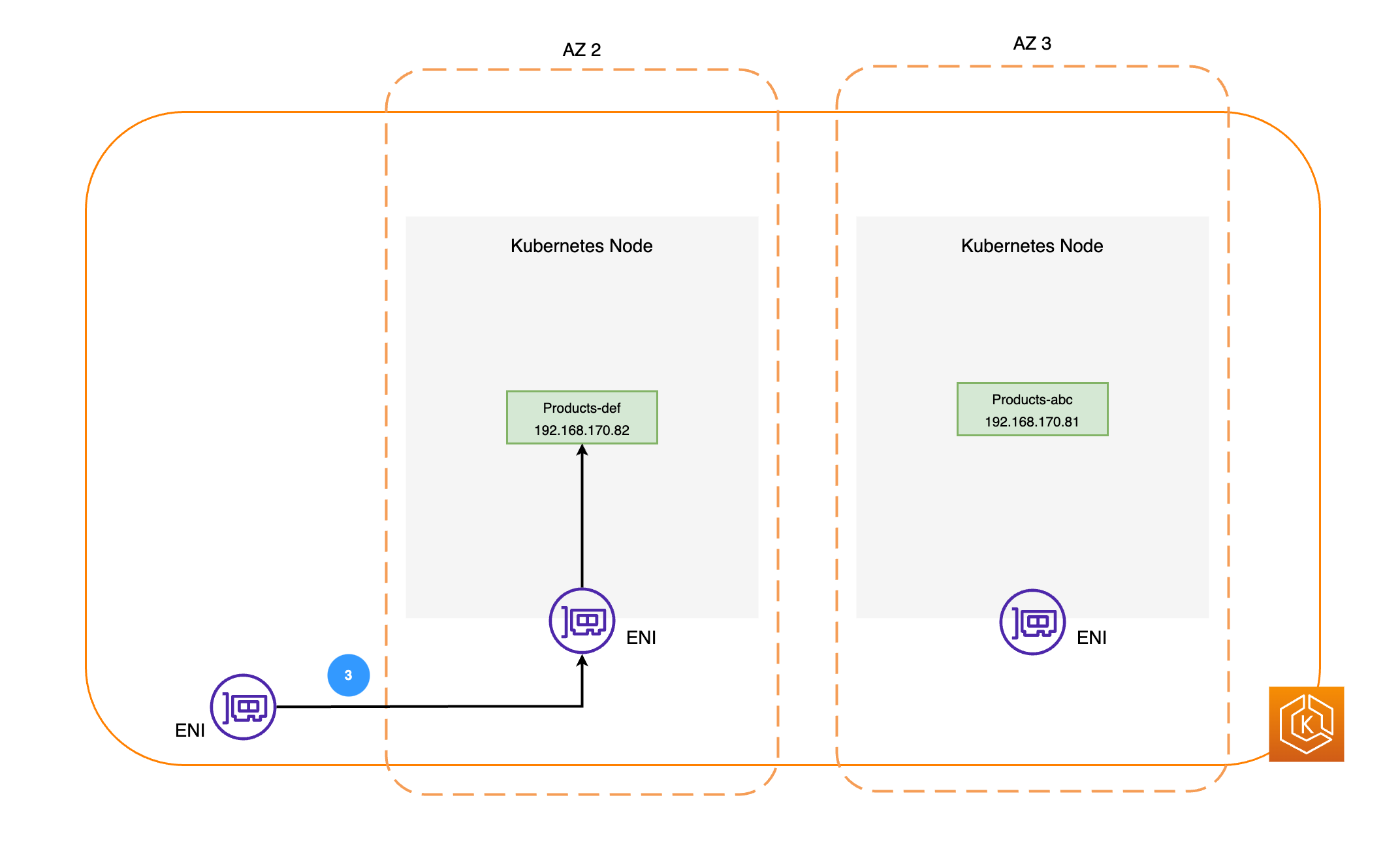

I pacchetti di rete vengono quindi inviati al Products Pod in AZ 2 tramite i rispettivi nodi (come ENIs illustrato nel diagramma precedente).
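To make the objects in the steps above more concrete, the following is a minimal sketch of a Service for Products and one EndpointSlice of the kind the EndpointSlice controller maintains for it. The names, labels, ports, IP address, node name, and zone are illustrative assumptions, not values from this guide. In practice you create only the Service; the EndpointSlice is generated and updated for you.

```yaml
# Service: gives Products a stable virtual IP (cluster IP) that CoreDNS returns to Orders.
apiVersion: v1
kind: Service
metadata:
  name: products              # hypothetical name
spec:
  type: ClusterIP
  selector:
    app: products             # hypothetical Pod label
  ports:
  - port: 80
    targetPort: 8080          # hypothetical container port
---
# EndpointSlice: managed by the EndpointSlice controller, shown here for illustration only.
apiVersion: discovery.k8s.io/v1
kind: EndpointSlice
metadata:
  name: products-abc12        # hypothetical generated name
  labels:
    kubernetes.io/service-name: products   # links the slice to its Service
addressType: IPv4
ports:
- protocol: TCP
  port: 8080
endpoints:
- addresses:
  - "10.0.2.15"               # Pod IP (illustrative)
  nodeName: ip-10-0-2-10.us-west-2.compute.internal
  zone: us-west-2b            # AZ of the node that runs the Pod
  conditions:
    ready: true
```

Each endpoint records both the node and the zone where its Pod runs, which is the per-endpoint information the kube-proxy consumes when it programs routing rules.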

Understanding ARC zonal shift in Amazon EKS

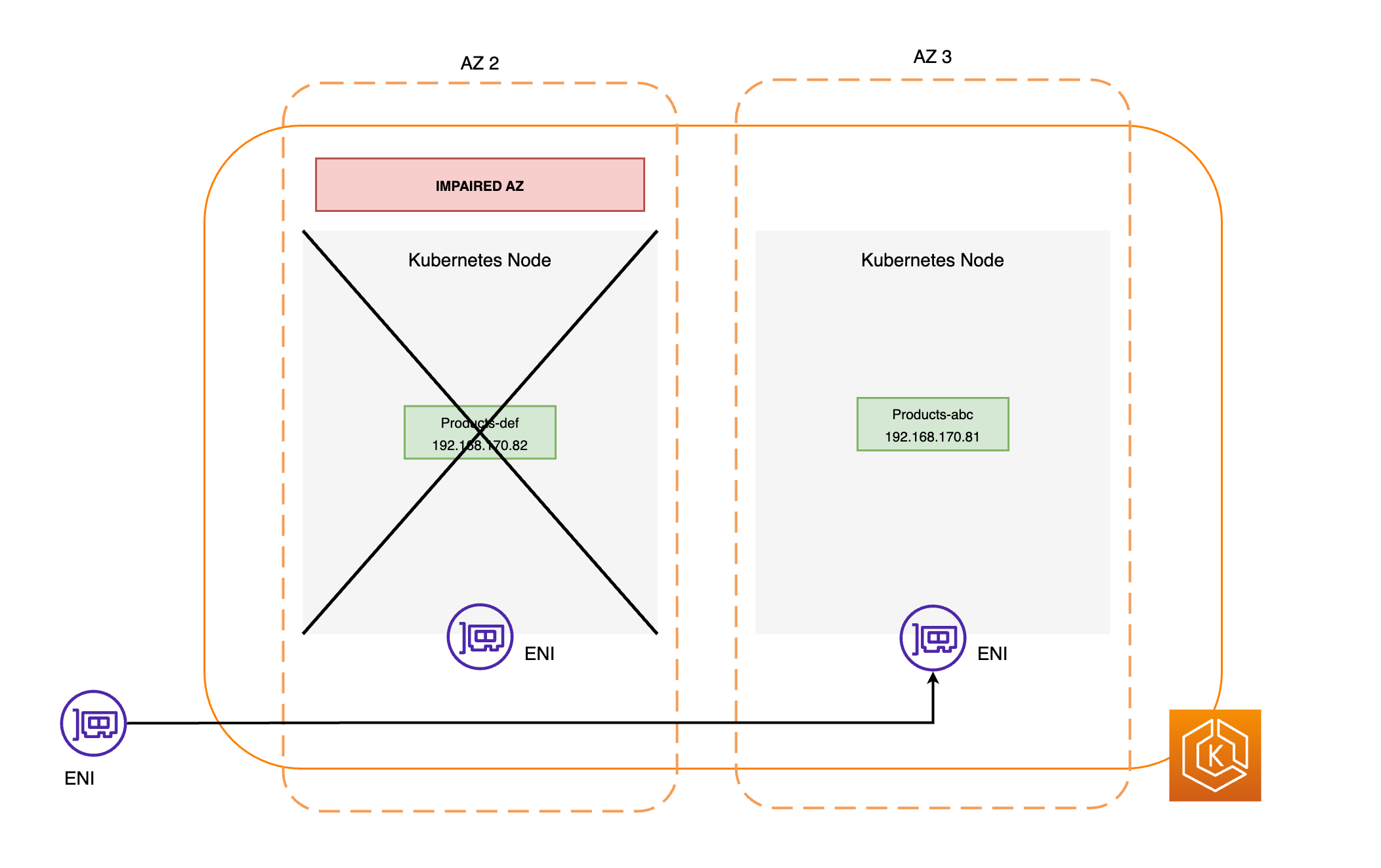

If there is an AZ impairment in your environment, you can start a zonal shift for your EKS cluster environment. Alternatively, you can allow AWS to manage this for you with zonal autoshift. With zonal autoshift, AWS monitors overall AZ health and responds to a potential AZ impairment by automatically shifting traffic away from the impaired AZ in your cluster environment.

After your Amazon EKS cluster has zonal shift enabled with ARC, you can start a zonal shift or enable zonal autoshift by using the ARC console, the AWS CLI, or the zonal shift and zonal autoshift APIs. During an EKS zonal shift, the following happens automatically:

-

Tutti i nodi della zona AZ interessata verranno isolati. Ciò impedirà a Kubernetes Scheduler di pianificare nuovi Pod sui nodi della zona AZ non integra.

-

Se utilizzi Managed Node Groups, il ribilanciamento delle zone di disponibilità verrà sospeso e il tuo Auto Scaling Group (ASG) verrà aggiornato per garantire che i nuovi nodi EKS Data Plane vengano lanciati solo nell'ambiente integro. AZs

-

I nodi della zona AZ non integra non verranno terminati e i Pod non verranno rimossi da questi nodi. Questo serve a garantire che, quando un turno di zona scade o viene annullato, il traffico possa essere reindirizzato in tutta sicurezza alla zona AZ, che ha ancora la piena capacità

-

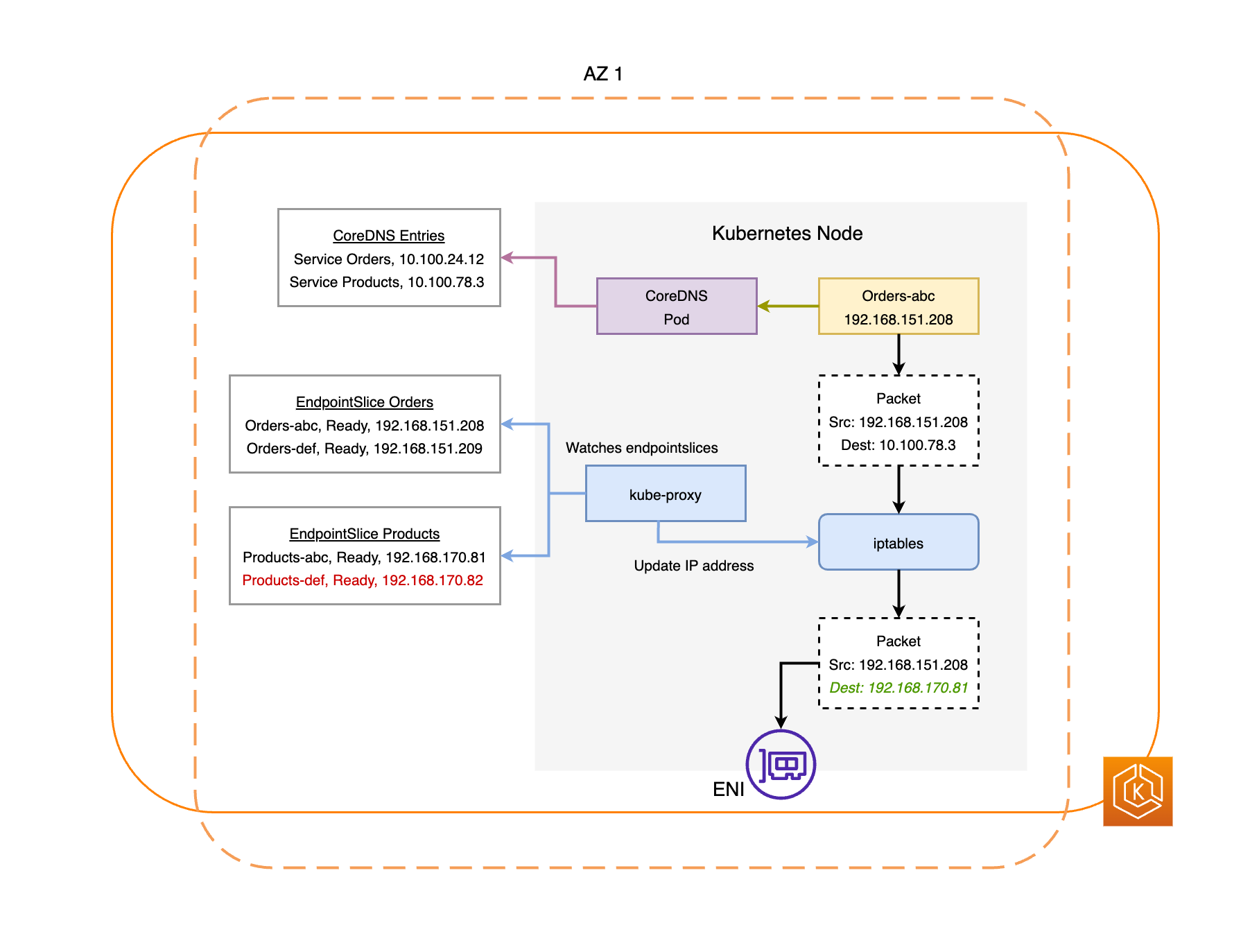

Il EndpointSlice controller troverà tutti gli endpoint Pod nella zona AZ compromessa e li rimuoverà dai relativi terminali. EndpointSlices Ciò garantirà che solo gli endpoint Pod integri AZs siano destinati a ricevere il traffico di rete. Quando un cambiamento di zona viene annullato o scade, il EndpointSlice controller lo aggiornerà EndpointSlices per includere gli endpoint nella AZ ripristinata.
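As a sketch of what "cordoned" means for the nodes in the list above, the following abbreviated Node object shows the fields involved. In Kubernetes, cordoning a node sets `spec.unschedulable` to `true` (and the control plane adds the matching taint), while the node's AZ is recorded in the well-known `topology.kubernetes.io/zone` label. The node name and zone are illustrative assumptions, and a real Node object contains many more fields.

```yaml
apiVersion: v1
kind: Node
metadata:
  name: ip-10-0-1-23.us-west-2.compute.internal   # illustrative node name
  labels:
    topology.kubernetes.io/zone: us-west-2a       # the AZ this node runs in (illustrative)
spec:
  unschedulable: true          # set when the node is cordoned; running Pods are not evicted
  taints:
  - key: node.kubernetes.io/unschedulable
    effect: NoSchedule         # keeps the scheduler from placing new Pods here
```

Because cordoning only blocks new scheduling, Pods already running on the node stay in place, which matches the behavior described in the list.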

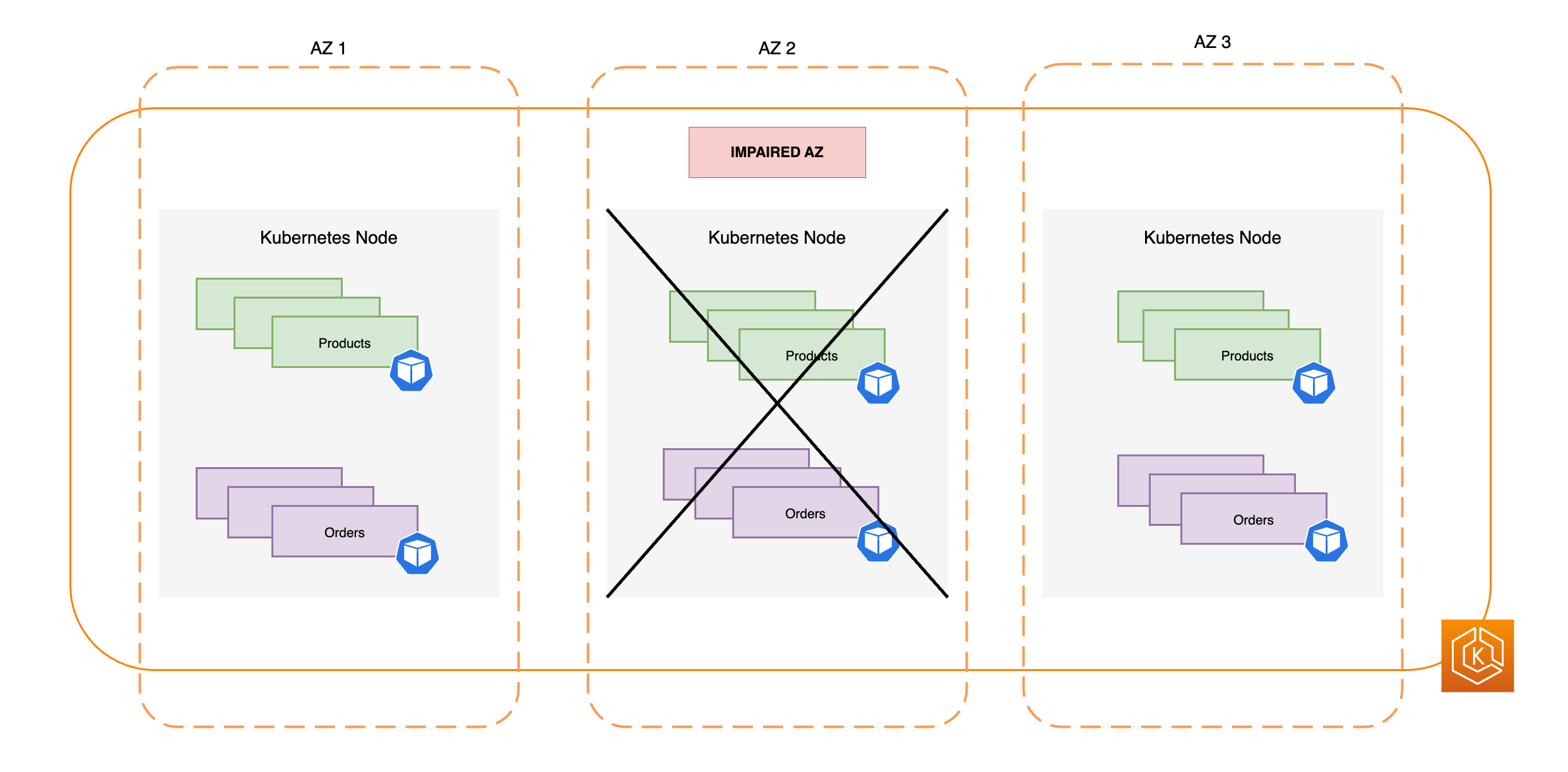

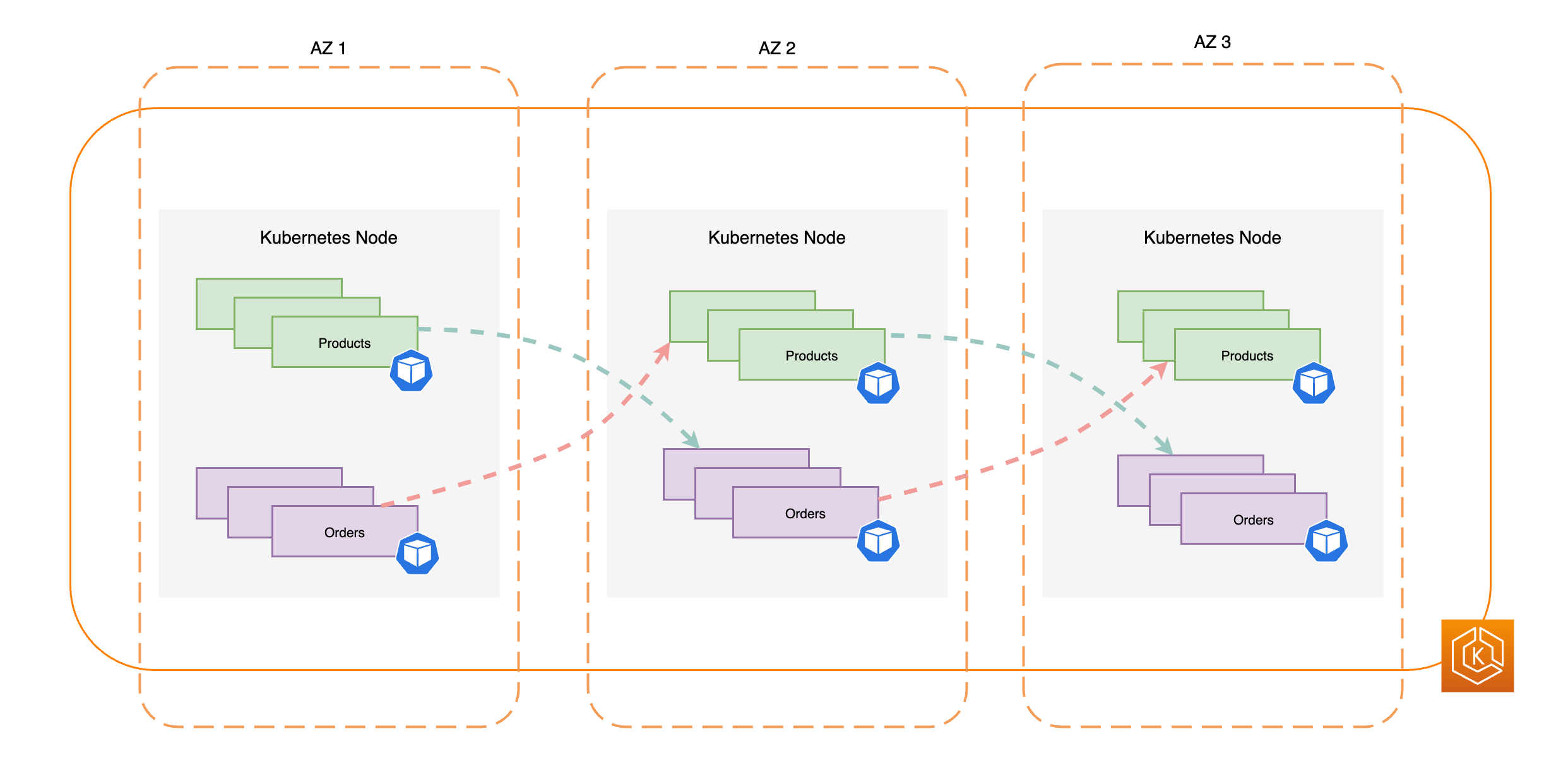

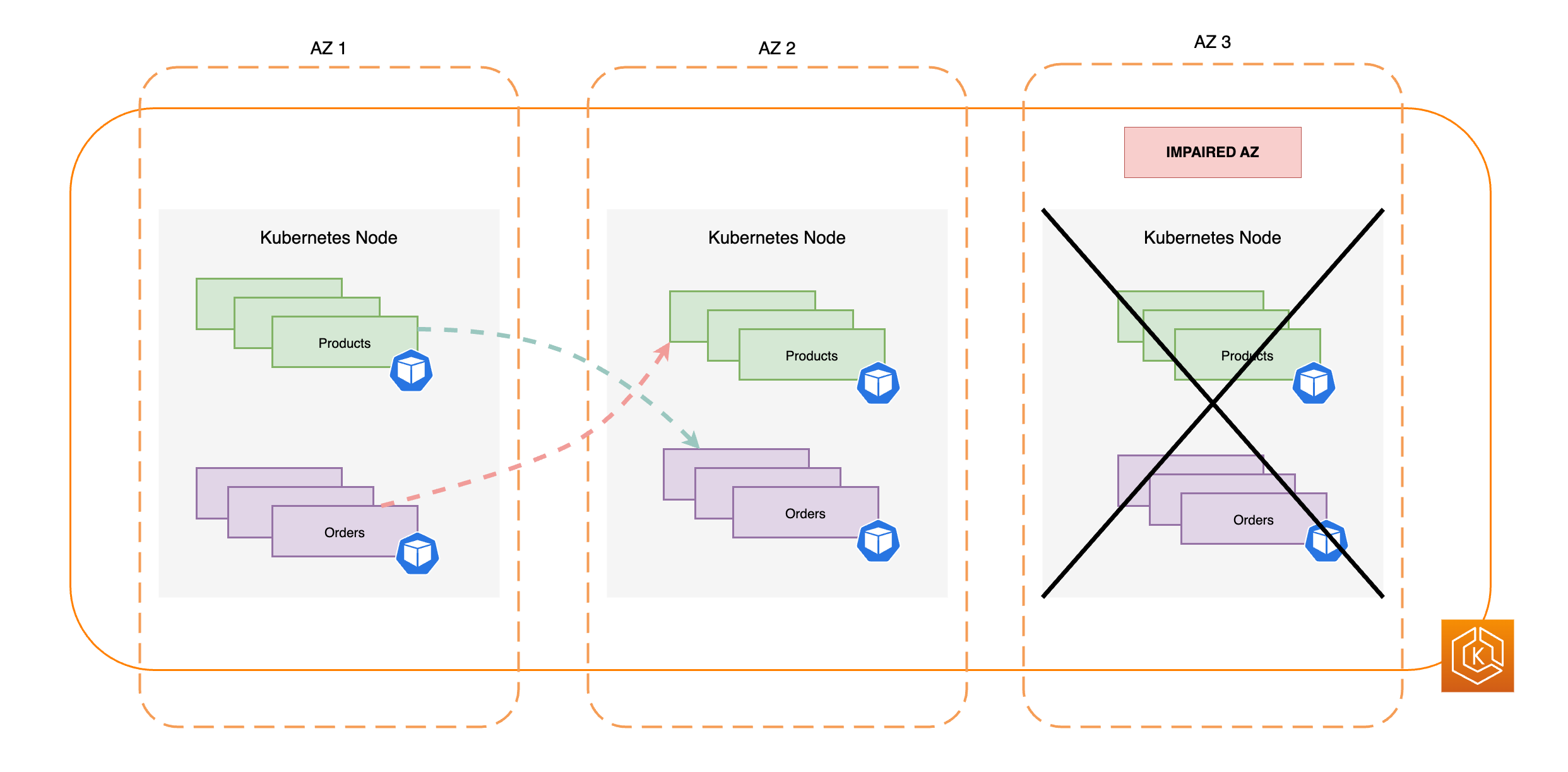

The diagrams illustrate a high-level flow of how EKS zonal shift ensures that only healthy Pod endpoints are targeted in your cluster environment.

EKS zonal shift requirements

For zonal shift to work successfully in EKS, you must set up your cluster environment ahead of time so that it is resilient to an AZ impairment. The following is a list of the steps to take.

- Provision your cluster's worker nodes across multiple AZs
- Provision enough compute capacity to withstand the removal of a single AZ
- Pre-scale your Pods (including CoreDNS) in every AZ
- Spread multiple Pod replicas across all AZs so that shifting away from a single AZ leaves you with sufficient capacity
- Colocate interdependent or related Pods in the same AZ
- Test that your cluster environment works as expected with one less AZ by manually starting a zonal shift. Alternatively, you can enable zonal autoshift and rely on the autoshift practice runs. This testing is not required for zonal shift to work in EKS, but it is strongly recommended.

Provision your EKS worker nodes across multiple AZs

AWS Regions have multiple, separate locations with physical data centers, known as Availability Zones (AZs). AZs are designed to be physically isolated from one another to avoid simultaneous impact that could affect an entire Region. When you provision an EKS cluster, deploy your worker nodes across multiple AZs in a Region. This makes your cluster environment more resilient to the impairment of a single AZ and lets you maintain high availability (HA) for applications running in the other AZs. When you start a zonal shift away from the impacted AZ, the in-cluster network of your EKS environment automatically updates to use only healthy AZs, while maintaining high availability for the cluster.

Ensuring such a multi-AZ setup for your EKS environment improves the overall reliability of your system. However, multi-AZ environments affect how application data is transferred and processed, which in turn affects your environment's network charges. In particular, frequent cross-zone egress traffic (traffic distributed between AZs) can have a major impact on your network-related costs. You can apply different strategies to control the amount of cross-zone traffic between Pods in your EKS cluster and reduce the associated costs. For more information, see the best practices guide.
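As one possible illustration of a multi-AZ data plane, the following sketch uses an eksctl `ClusterConfig` file. Using eksctl is an assumption made for this example (this guide does not prescribe a provisioning tool), and the cluster name, Region, AZ names, instance type, and node counts are hypothetical.

```yaml
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: my-cluster            # hypothetical cluster name
  region: us-west-2           # hypothetical Region
# Subnets are created in each of these AZs, so node groups can place nodes in all three.
availabilityZones:
- us-west-2a
- us-west-2b
- us-west-2c
managedNodeGroups:
- name: workers               # hypothetical node group name
  instanceType: m5.large      # illustrative instance type
  minSize: 3
  desiredCapacity: 6          # illustrative: two nodes per AZ when evenly balanced
  maxSize: 9
```

Whatever tool you use, the point of the sketch is the same: the node group's subnets span at least three AZs, so losing one AZ still leaves nodes in the other two.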

The following diagram illustrates a highly available EKS environment with three healthy AZs.

The following diagram shows how an EKS environment with three AZs is resilient to an AZ impairment and remains highly available because of the two remaining healthy AZs.

Provision enough compute capacity to withstand the removal of a single AZ

To optimize resource utilization and costs for the compute infrastructure in the EKS data plane, it's a best practice to align compute capacity with your workload requirements. However, if all your worker nodes are at full capacity, new worker nodes must be added to the EKS data plane before new Pods can be scheduled. When you run critical workloads, it's generally good practice to keep redundant capacity online to handle events such as sudden increases in load and node health issues. If you plan to use zonal shift, you are planning to remove an entire AZ of capacity, so you must size your redundant compute capacity to handle the load even with one AZ offline.

When you scale your compute, adding new nodes to the EKS data plane takes time, which can affect the real-time performance and availability of your applications, especially during a zonal impairment. Your EKS environment must be able to absorb the load from losing an AZ without degrading the experience of your end users or clients. This means minimizing or eliminating any lag between the time a new Pod is needed and the time it is actually scheduled on a worker node.

In addition, during a zonal impairment, you need to mitigate the risk of a compute capacity constraint that would prevent newly required nodes from being added to your EKS data plane in the healthy AZs.

To accomplish this, over-provision compute capacity on some of the worker nodes in each AZ, so that the Kubernetes Scheduler has pre-existing capacity available for new Pod placements, especially when you have one less AZ in your environment.
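One common way to hold spare capacity online, sketched below, is to run low-priority placeholder Pods that reserve resources in every AZ and are preempted as soon as real workloads need the room. This pattern is an assumption added for illustration and is not described in this guide; the names, replica count, and resource requests are hypothetical and would need to be sized to the headroom you want.

```yaml
# Low-priority class: Pods that use it are preempted first when higher-priority Pods need room.
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: overprovisioning            # hypothetical name
value: -10
globalDefault: false
description: "Priority class for placeholder Pods that reserve spare capacity."
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: capacity-reservation        # hypothetical name
spec:
  replicas: 3                       # illustrative: one placeholder per AZ
  selector:
    matchLabels:
      app: capacity-reservation
  template:
    metadata:
      labels:
        app: capacity-reservation
    spec:
      priorityClassName: overprovisioning
      # Spread the placeholders evenly so that every AZ holds some reserved capacity.
      topologySpreadConstraints:
      - maxSkew: 1
        topologyKey: topology.kubernetes.io/zone
        whenUnsatisfiable: DoNotSchedule
        labelSelector:
          matchLabels:
            app: capacity-reservation
      containers:
      - name: pause
        image: registry.k8s.io/pause:3.9   # does nothing; only its resource requests matter
        resources:
          requests:
            cpu: "1"                # illustrative headroom per placeholder
            memory: 1Gi
```

The design choice here is that the reserved capacity is already running on existing nodes, so replacement Pods can be scheduled immediately instead of waiting for new nodes to launch.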

Run and spread multiple Pod replicas across AZs

Kubernetes lets you pre-scale your workloads by running multiple instances (Pod replicas) of a single application. Running multiple Pod replicas for an application eliminates a single point of failure and increases overall performance by reducing the resource strain on any single replica. However, to have both high availability and better fault tolerance for your applications, run multiple replicas of an application and spread them across different failure domains (also called topology domains), which in this case are the AZs. With topology spread constraints, you can spread the replicas across AZs so that enough replicas remain in the healthy AZs when one AZ is impaired.

The following diagram illustrates an EKS environment with east-to-west traffic flow when all AZs are healthy.

The following diagram illustrates an EKS environment with east-to-west traffic flow when a single AZ fails and you start a zonal shift.

The following code snippet is an example of how to set up your workload with this Kubernetes feature.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: orders
    spec:
      replicas: 9
      selector:
        matchLabels:
          app: orders
      template:
        metadata:
          labels:
            app: orders
            tier: backend
        spec:
          # Spread replicas evenly across AZs; with 9 replicas and 3 AZs this gives 3 per AZ.
          topologySpreadConstraints:
          - maxSkew: 1
            topologyKey: "topology.kubernetes.io/zone"
            whenUnsatisfiable: ScheduleAnyway
            labelSelector:
              matchLabels:
                app: orders
          # The original snippet omits containers; this entry is a placeholder.
          containers:
          - name: orders
            image: example.com/orders:1.0   # placeholder image, not from the original

Most important, run multiple replicas of your DNS server software (CoreDNS/kube-dns) and apply similar topology spread constraints if they are not already configured by default. This helps ensure that, if a single AZ is impaired, enough healthy DNS Pods remain in the other AZs to keep handling service discovery requests for other communicating Pods in the cluster. The CoreDNS EKS add-on has default settings that spread the CoreDNS Pods across your cluster's Availability Zones if nodes are available in multiple AZs. You can also replace these defaults with your own custom configuration.

When you install CoreDNS with Helm, update replicaCount and topologySpreadConstraints in the values.yaml file. The following code snippet shows how to configure CoreDNS for this purpose.

CoreDNS Helm values.yaml

    replicaCount: 6
    topologySpreadConstraints:
    - maxSkew: 1
      topologyKey: topology.kubernetes.io/zone
      whenUnsatisfiable: ScheduleAnyway
      labelSelector:
        matchLabels:
          k8s-app: kube-dns

If there is an AZ impairment, you can absorb the increased load on the CoreDNS Pods by using an autoscaling system for CoreDNS. The number of DNS instances you need depends on the number of workloads running in the cluster. CoreDNS is CPU bound, which allows it to scale based on CPU by using the Horizontal Pod Autoscaler (HPA), as in the following example.

    apiVersion: autoscaling/v1
    kind: HorizontalPodAutoscaler
    metadata:
      name: coredns
      # Must match the namespace of the CoreDNS Deployment that is being scaled.
      namespace: default
    spec:
      maxReplicas: 20
      minReplicas: 2
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: coredns
      targetCPUUtilizationPercentage: 50

Alternatively, EKS can manage the autoscaling of the CoreDNS Deployment in the EKS add-on version of CoreDNS. This CoreDNS autoscaler continuously monitors the cluster state, including the number of nodes and CPU cores. Based on that information, the controller dynamically adapts the number of replicas of the CoreDNS deployment in an EKS cluster.

To enable the autoscaling configuration in the CoreDNS EKS add-on, add the following optional configuration settings:

    {
      "autoScaling": {
        "enabled": true
      }
    }

You can also use NodeLocal DNS.

Colocate interdependent Pods in the same AZ

In most cases, you run distinct workloads that must communicate with each other to complete an end-to-end process. If these applications are spread across different AZs but are not colocated in the same AZ, a single AZ impairment can affect the whole end-to-end process. For example, if Application A has multiple replicas in AZ 1 and AZ 2, but Application B has all its replicas in AZ 3, the loss of AZ 3 affects every end-to-end process between the two workloads (Application A and Application B). Combining topology spread constraints with pod affinity can improve your application's resilience by spreading Pods across all AZs while also configuring a relationship between certain Pods to ensure that they are colocated.

With pod affinity rules, you can define relationships between workloads so that the Kubernetes Scheduler colocates Pods on the same worker node or in the same AZ, as in the following example.

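    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: products
      namespace: ecommerce
      labels:
        app.kubernetes.io/version: "0.1.6"
    spec:
      # selector, Pod labels, and containers are not in the original snippet;
      # they are added so that the manifest is complete.
      selector:
        matchLabels:
          app: products
      template:
        metadata:
          labels:
            app: products
        spec:
          serviceAccountName: graphql-service-account
          affinity:
            podAffinity:
              # Hard requirement: schedule only onto a node that already runs an "orders" Pod.
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                  - key: app
                    operator: In
                    values:
                    - orders
                topologyKey: "kubernetes.io/hostname"
          containers:
          - name: products
            image: example.com/products:0.1.6   # placeholder image, not from the original

The following diagram shows Pods that have been colocated on the same node by using pod affinity rules.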
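The example above uses `kubernetes.io/hostname` as the topology key, which colocates Pods on the same node. As a hedged variation, the following sketch combines topology spread constraints with a pod affinity rule keyed on `topology.kubernetes.io/zone`, so that Products replicas are spread across AZs while preferring AZs that already run Orders Pods. The replica count, labels, weight, and container image are assumptions for illustration; the affinity term matches Orders Pods in the same namespace as this Deployment.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: products
  namespace: ecommerce
spec:
  replicas: 6                         # illustrative: two replicas per AZ with three AZs
  selector:
    matchLabels:
      app: products
  template:
    metadata:
      labels:
        app: products
    spec:
      # Spread Products replicas evenly across AZs.
      topologySpreadConstraints:
      - maxSkew: 1
        topologyKey: topology.kubernetes.io/zone
        whenUnsatisfiable: ScheduleAnyway
        labelSelector:
          matchLabels:
            app: products
      affinity:
        podAffinity:
          # Soft preference: favor AZs that already contain Pods labeled app=orders.
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app
                  operator: In
                  values:
                  - orders
              topologyKey: topology.kubernetes.io/zone
      containers:
      - name: products
        image: example.com/products:0.1.6   # placeholder image
```

A preferred (soft) rule is used here so that Products Pods can still be scheduled if no Orders Pod is running in a zone; a required rule would leave them pending in that case.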

Test that your cluster environment can handle the loss of an AZ

After you complete the requirements above, the next important step is to test that you have sufficient compute and workload capacity to handle the loss of an AZ. You can do this by manually starting a zonal shift in EKS. Alternatively, you can enable zonal autoshift and configure practice runs to test that your applications work as expected with one less AZ in your cluster environment.

Frequently asked questions

Why should I use this feature?

By using ARC zonal shift or zonal autoshift in your EKS cluster, you can better maintain the availability of your Kubernetes applications by automating the quick recovery process of shifting in-cluster network traffic away from an impaired AZ. With ARC, you can avoid long and complicated steps that often lead to an extended recovery period during AZ impairment events.

How does this feature work with other AWS services?

EKS integrates with ARC, which provides the primary interface for performing recovery operations in AWS. To ensure that in-cluster traffic is appropriately routed away from an impaired AZ, EKS modifies the list of network endpoints for Pods running in the Kubernetes data plane. If you use AWS load balancers to route external traffic into the cluster, you can already register your load balancers with ARC and start a zonal shift on them to prevent traffic from flowing into the degraded AZ. This feature also interacts with Amazon EC2 Auto Scaling groups (ASGs) that are created by EKS managed node groups (MNGs). To prevent an impaired AZ from being used for new Kubernetes Pods or node launches, EKS removes the impaired AZ from the ASG.

How is this feature different from default Kubernetes protections?

This feature works in tandem with several native, built-in Kubernetes protections that help customers stay resilient. You can configure Pod readiness and liveness probes that decide when a Pod should receive traffic. When these probes fail, Kubernetes removes these Pods as targets for a service, and traffic is no longer sent to the Pod. While this is useful, it is not trivial for customers to configure these health checks so that they are guaranteed to fail when an AZ is degraded. The ARC zonal shift feature provides an additional safety net that helps you isolate a degraded AZ entirely when the native Kubernetes protections are not enough. It also gives you an easy way to test the operational readiness and resilience of your architecture.
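For reference, a minimal sketch of the readiness and liveness probes mentioned in this answer might look like the following. The Pod name, image, port, and probe paths are hypothetical; the probe timings are illustrative rather than recommended values.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: orders-probe-example        # hypothetical name
  labels:
    app: orders
spec:
  containers:
  - name: orders
    image: example.com/orders:1.0   # placeholder image
    ports:
    - containerPort: 8080
    # Readiness: while this probe fails, the Pod is removed from Service endpoints.
    readinessProbe:
      httpGet:
        path: /ready                # hypothetical endpoint
        port: 8080
      periodSeconds: 5
      failureThreshold: 3
    # Liveness: when this probe fails, the kubelet restarts the container.
    livenessProbe:
      httpGet:
        path: /healthz              # hypothetical endpoint
        port: 8080
      initialDelaySeconds: 10
      periodSeconds: 10
```

Probes like these observe only the container itself, which is why they may keep passing while the AZ around the Pod is degraded.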

Can AWS start a zonal shift on my behalf?

Yes. If you want a fully automated way of using ARC zonal shift, you can enable ARC zonal autoshift. With zonal autoshift, you can rely on AWS to monitor the health of the AZs for your EKS cluster and to automatically start a shift when an AZ impairment is detected.

What happens if I use this feature and my worker nodes and workloads are not pre-scaled?

If you are not pre-scaled and rely on provisioning additional nodes or Pods during a zonal shift, you risk a delayed recovery. Adding new nodes to the Kubernetes data plane takes time, which can affect the real-time performance and availability of your applications, especially during a zonal impairment. In addition, during a zonal impairment you might encounter a compute capacity constraint that prevents newly required nodes from being added to the healthy AZs.

If your workloads are not pre-scaled and spread across all AZs in your cluster, a zonal impairment can affect the availability of an application that runs only on worker nodes in the impacted AZ. To mitigate the risk of a complete availability outage for your application, EKS has a fail-safe that sends traffic to Pod endpoints in an impaired zone if the workload has all of its endpoints in the unhealthy AZ. However, we strongly recommend that you pre-scale and spread your applications across all AZs to maintain availability in the event of a zonal issue.

What happens if I'm running a stateful application?

If you are running a stateful application, you need to assess its fault tolerance based on your use case and architecture. If you have an active/standby architecture or pattern, there might be cases where the active instance is in an impaired AZ. At the application level, if the standby is not activated, you might run into issues with your application. You might also run into issues when new Kubernetes Pods are launched in healthy AZs, because they cannot attach to persistent volumes that are bound to the impaired AZ.

Does this feature work with Karpenter?

Karpenter support is currently not available with ARC zonal shift and zonal autoshift in EKS. If an AZ is impaired, you can adjust the relevant Karpenter NodePool configuration by removing the unhealthy AZ, so that new worker nodes are launched only in the healthy AZs.
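As a sketch of the manual adjustment described in this answer, the following NodePool restricts new nodes to two healthy AZs by listing only those zones in a `topology.kubernetes.io/zone` requirement. The `karpenter.sh/v1` API version, the NodePool and EC2NodeClass names, and the zone names are assumptions; check them against the Karpenter version installed in your cluster, and restore the removed zone after the AZ recovers.

```yaml
apiVersion: karpenter.sh/v1
kind: NodePool
metadata:
  name: default                      # hypothetical NodePool name
spec:
  template:
    spec:
      nodeClassRef:
        group: karpenter.k8s.aws
        kind: EC2NodeClass
        name: default                # hypothetical EC2NodeClass name
      requirements:
      # The impaired AZ (for example us-west-2c) has been removed from this list,
      # so Karpenter launches new nodes only in the remaining zones.
      - key: topology.kubernetes.io/zone
        operator: In
        values:
        - us-west-2a
        - us-west-2b
```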

Does this feature work with EKS Fargate?

This feature does not work with EKS Fargate. By default, when EKS Fargate recognizes a zonal health event, Pods prefer to run in the other AZs.

Will the EKS managed Kubernetes control plane be impacted?

No. By default, Amazon EKS runs and scales the Kubernetes control plane across multiple AZs to ensure high availability. ARC zonal shift and zonal autoshift act only on the Kubernetes data plane.

Are there any costs associated with this new feature?

You can use ARC zonal shift and zonal autoshift in your EKS cluster at no additional charge. However, you continue to pay for provisioned instances, and we strongly recommend that you pre-scale your Kubernetes data plane before using this feature. Consider the right balance between cost and application availability.